International white supremacist groups remain online, spreading same conspiracy theory that inspired New Zealand attack

Neo-Nazi groups have been allowed to remain on Facebook because the social media giant found they did not violate its “community standards”, it has been revealed.

Pages operated by factions of international white supremacist organisations including Combat 18 and the Misanthropic Division were reported, but Facebook refused to remove the content and told researchers to unfollow pages if they found them “offensive”.

A Counter Extremism Project report, seen exclusively by The Independent, showed the same response was received for chapters of Be Active Front USA, a racist skinhead group, and the neo-Nazi British Movement.

Several of the pages unsuccessfully reported included racist and homophobic statements, such as calling non-whites “vermin” and gay people “degenerates”, as well as images of Adolf Hitler and fascist symbols.

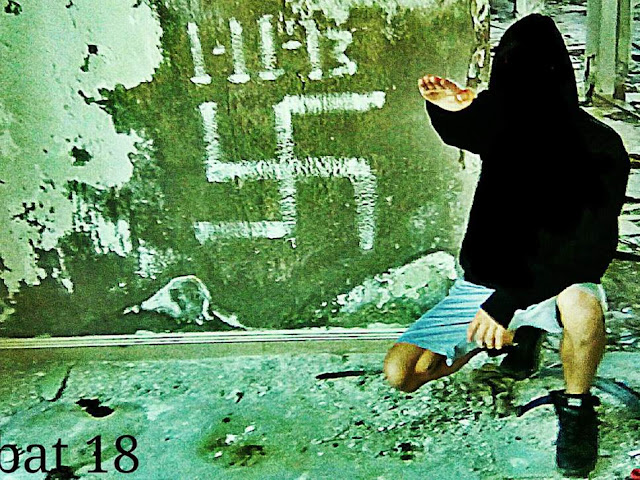

Facebook refused to take down a page used by Combat 18’s Greek wing, despite its cover photo showing a man performing a Nazi salute in front of a wall sprayed with a swastika.

Another page for Combat 18’s Australian faction complained that after the New Zealand terror attack, the “media and leftists would carry on for months”, while spreading the same ideology that inspired the shooter.

Originating in the UK as a neo-Nazi street-fighting group, Combat 18 has units in dozens of countries and has been suspected in the murders of immigrants and ethnic minorities.

Other pages left online were being used to sell neo-Nazi merchandise and music, which generates funding for extremists.

Hans-Jakob Schindler, senior director of the Counter Extremism Project (CEP), said Facebook and other platforms were allowing hate groups to “network and build echo chambers worldwide”.

“Facebook services a third of the world’s population [2.32 billion monthly active users], it’s the biggest platform there is,” he added.

“But the company’s business model is content on the platform, not content off the platform, [so] unless there is clear, sustained public pressure on the right-wing extremism issue, we will not see significant progress.”

Mr Schindler called for legal regulation, as the British government prepares to publish a white paper on tackling “online harms”.

The fresh controversy comes after Facebook was condemned for failing to stop the New Zealand attacker’s livestream until it was reported by police.

Days later, The Independent revealed that anti-Islam group Britain First had set up three new pages a year after being banned for hate speech.

Mr Schindler said that although Facebook and other social media firms have dramatically improved their response to Isis propaganda and other Islamist material, the response to far-right extremism was lagging behind.

“Terrorism is terrorism, and Christchurch unfortunately proved our point that both sides of the equation are equally concerning and equally dangerous,” he warned.

“In the west, we have right-wing nationalist extremist groups who feel encouraged at the moment to take action.”

The alleged Christchurch shooter published an online document that said he got his views from “the internet, of course”, and the white genocide conspiracy theory cited as his main justification was being spread by several Facebook pages reported by the CEP.

One, a Canadian branch of the Blood and Honour neo-Nazi music network, claimed to condemn the New Zealand terror attack but openly stated its belief in “cultural replacement tactics that are plaguing Western civilization” in a public post.

The page, which remained online on Sunday, called the atrocity a “misguided effort to make a positive change in the world”.

It was one of 40 Facebook pages belonging to right-wing extremist groups and retailers, which were monitored by CEP researchers between September and November last year.

The sample included 22 groups “that promote the ideology of neo-Nazism and white supremacy”, and 18 Facebook stores promoting extremist music and merchandise.

By the end of the period, five of the 40 pages monitored had been taken down for unknown reasons and the remaining 35 had increased their audience by more than 2,300 likes.

When the CEP reported the 35 online pages, Facebook said it would remove six but dismissed reports for others with a generically worded response that read: “We looked over the page you reported, and though it doesn’t go against one of our specific community standards, we understand that the page or something shared on it may still be offensive to you and others.”

Facebook advised researchers to “avoid things like this” by blocking or unfollowing the pages. Several have subsequently been removed.

One American retailer reported by the CEP was selling T-shirts with the slogan “kill your local drug dealer”, alongside stickers showing antifascists being shot in the head.

Factions of the international neo-Nazi group Green Line Front also remained on Facebook, alongside antisemitic “Christian identity” groups and the US National Alliance Reform and Restoration Group, which calls for supporters to mount a “white revolution … and take back our homeland from the invaders”.

Facebook’s community standards define hate speech as a “direct attack” on characteristics including race, national origin, religion and sexual orientation.

“When neo-Nazi groups that clearly violate the platform’s policies are allowed to maintain pages, it raises serious questions regarding Facebook’s commitment to their own policies,” the CEP’s report concluded.

Facebook figures show it removed 12.4 million pieces of “terrorist content” in six months last year. Around 2.5 million pieces of hate speech were deleted in the first quarter of 2018.

A spokesperson said: “We want Facebook to be a safe place and we will continue to invest in keeping harm, terrorism, and hate speech off the platform.

“Our community standards ban dangerous individuals and organisations, and we have taken down the content that violates this policy. Every day we are working to keep our community safe, and our team is always developing and reviewing our policies.

“We’re also creating a new independent body for people to appeal content decisions and working with governments on regulation in areas where it doesn’t make sense for a private company to set the rules on its own.”

(Source: Independent)

